A Practical Guide to Split Testing

Over the past decade, experience optimizers have devised many strategies to increase conversions and overall business revenue. They have tried persona-based marketing, conversational marketing, social media and viral marketing, celebrity endorsements, and more. But none has proved consistently effective at increasing sales and sustaining those gains over time.

Enter split testing.

Unlike other marketing techniques, split testing, also called A/B testing, helps experience optimizers focus their energy and budget in the right direction. It lets them experiment with marketing campaigns or strategies on live traffic.

If you are an experience optimizer, split testing lets you show your target audience both the old and the new version of a web page or element you wish to change. You can then compare the results to see which version performs better and use the data to decide whether to roll out the change.

Split testing is, therefore, the method of conducting controlled, randomized experiments with the core objective of improving a website metric. This metric could be overall conversions, click-through rate, number of purchases, form completions, and more.

Let’s look in depth at the concept of split testing, its scope, how you can start implementing it, and the tools that can help you in the process.

Simplifying the concept of split testing

The objective of split testing is to identify how a change to a web page, advertising campaign, or email affects the likelihood of a desired outcome. For instance, let’s say you own an online homemade chocolate venture. Your monthly website traffic is 50,000 visitors. As a marketing-savvy owner, you want to test the hypothesis that customers are more likely to make a purchase when offered additional discounts or deals on all products, even though your brand is already a renowned name in the market. To find out, you plan to run a split test.

You create a new version of your homepage banner that mentions additional discounts available to all visitors on all products. The original homepage banner simply shows the new products introduced in the current month with regular launch offers.

You launch the test and split traffic equally between the two versions: 50% of the visitors see the old version (control), and 50% see the new version (variation). After running the test for a couple of weeks, you find that the data supports your hypothesis. People who saw the variation bought more chocolates from your website than those who saw the control.

The outcome? Split testing provides data-backed conclusions about specific elements of your web page. It offers a swift way to find out whether your planned business strategies or campaigns are likely to produce a positive result and move the needle upward. Split testing also helps save time, money, and effort, and gives results in a shorter timespan. You can see this for yourself by taking a 30-day all-inclusive free trial with VWO.
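
One common way to implement a 50/50 split like this is to hash a stable visitor identifier, so that the same visitor always sees the same version. The sketch below is a minimal illustration and not how any particular tool does it; the `assign_variant` function, the experiment name, and the visitor IDs are all hypothetical.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str = "homepage-banner") -> str:
    """Deterministically place a visitor in 'control' or 'variation' (50/50)."""
    # Hash the experiment name together with the visitor ID so that
    # different experiments split the same audience independently.
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Map the first 8 bytes of the hash to a bucket from 0 to 99.
    bucket = int.from_bytes(digest[:8], "big") % 100
    return "control" if bucket < 50 else "variation"


if __name__ == "__main__":
    # Simulate one month of traffic (50,000 hypothetical visitors).
    counts = {"control": 0, "variation": 0}
    for i in range(50_000):
        counts[assign_variant(f"visitor-{i}")] += 1
    print(counts)
```

Because the assignment depends only on the visitor ID and the experiment name, a returning visitor lands in the same group on every visit without any stored state.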

Which pages or page elements should you split test?

If you’re planning to run a split test on your site, there are a couple of logical places to start. Ideally, you should begin testing pages that add the highest value to your business.

Once you’ve identified these pages, dig into the page elements that can affect your key metric and that you’d like to experiment with. Typical page elements include, but are not limited to:

- Headline

- Feature/Banner image

- CTA

- Purchase button

- Form

- Testimonials

- Entire page design

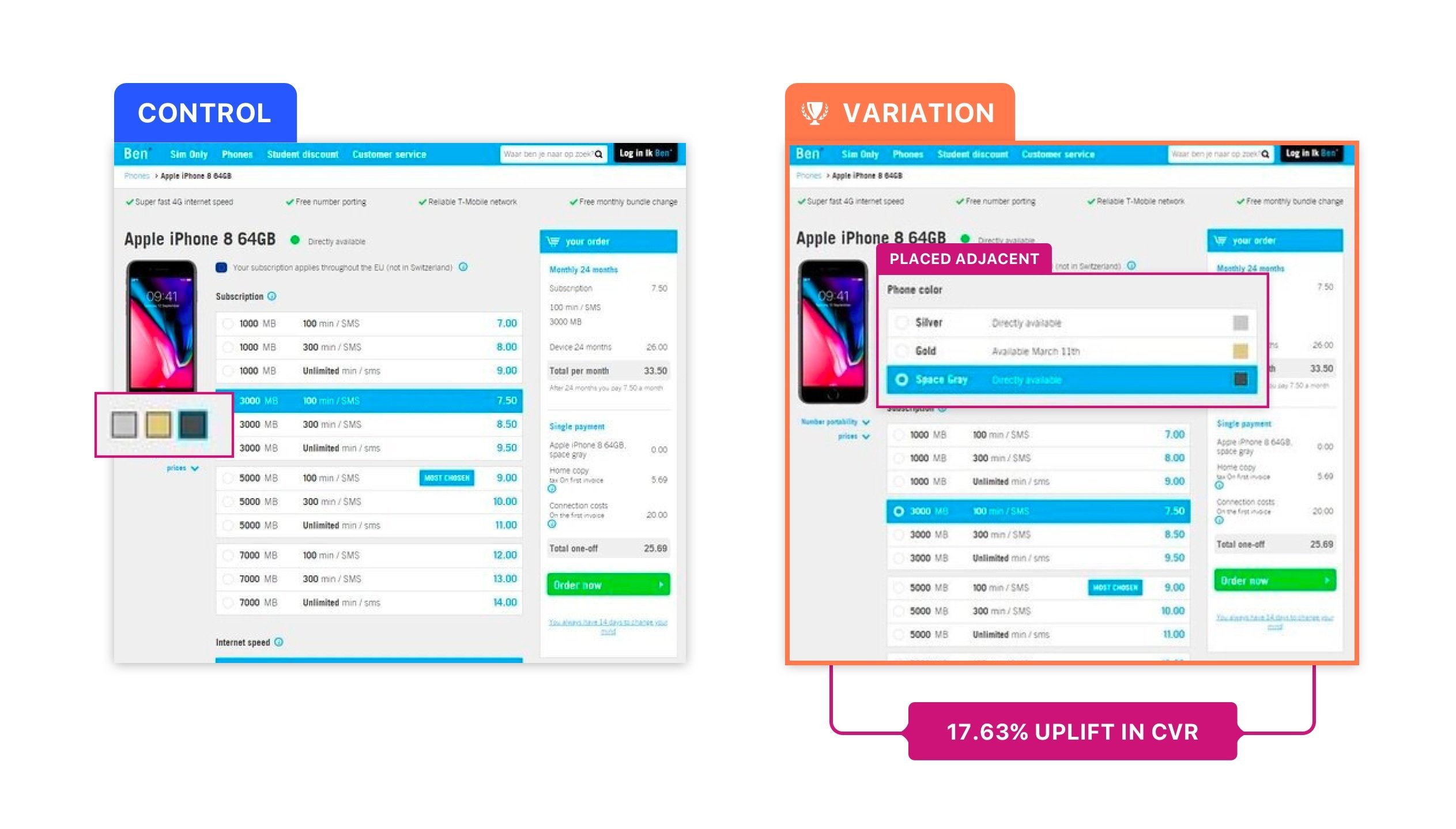

The example below from Ben, a budget challenger brand in the Dutch telecom industry, illustrates how a small change to a page element can significantly increase the conversion rate. The company ran a split test on its product pages to make the phone color options more prominent. The execs at the organization found that most people visiting the product pages were unaware that they could choose a phone color along with the best data and voice plan. The data collected through VWO Insights also revealed that people either did not notice the color palette below the phone image or did not understand what it was for.

To make the palette more visible and the selection easier, the execs at Ben moved the color palette from below the phone image to a position beside it.

Here’s how the control and the variation look:

Image Source: Ben

After running this test for about two weeks, the execs found that this small change to the product page increased conversions by 17.63% and significantly reduced customer calls to change device colors.

Many other page elements can be tested for their effect on the conversion rate.

What’s great about split testing is that it’s a universally applicable experimentation method. Experience optimizers can use it to examine any assumption or theory they or their colleagues come up with. Whether it’s an eCommerce site, a social media campaign, or an ad campaign, almost anything can be tested using the split testing methodology.

Statistical modeling: Bayesian vs. Frequentist approach

Before learning how to run a split test, it’s essential to understand the statistical models on which experiments run.

Split testing tools typically work on either the Frequentist or the Bayesian statistical model. In the Frequentist model, conclusions are drawn from how often an outcome occurred over the course of the experiment, usually by checking whether the observed difference could plausibly be due to chance. A test using the Frequentist approach generally requires a larger sample size to reach a statistically significant conclusion. In short, you have to collect a lot of data. For a page or website with low traffic, this approach can require a lengthy testing phase.
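
As a rough illustration of the Frequentist approach, the sketch below runs a two-proportion z-test, one common Frequentist method, on conversion counts. The function name and the visitor and conversion numbers are made up for illustration; a real testing tool may use a different test.

```python
from math import erfc, sqrt


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Return (z, two-sided p-value) for control A versus variation B."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled conversion rate under the null hypothesis of no difference.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("Standard error is zero; the rates cannot be compared.")
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = erfc(abs(z) / sqrt(2))
    return z, p_value


if __name__ == "__main__":
    # Hypothetical data: 25,000 visitors per version.
    z, p = two_proportion_z_test(conv_a=500, n_a=25_000, conv_b=600, n_b=25_000)
    print(f"z = {z:.2f}, p-value = {p:.4f}")
```

A p-value below a threshold chosen in advance (0.05 is a common convention) is usually read as a statistically significant difference. For these made-up numbers, the p-value comes out well below 0.05.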

The Bayesian statistical model, on the other hand, expresses results as the probability of an outcome given the data collected so far, for example, the probability that the variation beats the control. You don’t need the same number of visitors to estimate this probability, which is a fundamental difference. Tests based on the Bayesian model can run for a shorter time than Frequentist tests (in some cases up to 50% shorter) and deliver actionable findings for low-traffic pages faster. Hence, the Bayesian model makes more sense and proves more useful for campaign and strategy experiments of all types and sizes. We, at VWO, also use and advocate the Bayesian model over the Frequentist one.
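
To make the Bayesian idea concrete, the sketch below estimates the probability that the variation has a higher conversion rate than the control. It assumes a uniform Beta(1, 1) prior and uses Monte Carlo sampling from the resulting Beta posteriors; the function name and the numbers are hypothetical, and this is not a description of VWO's actual statistics engine.

```python
import random


def prob_variation_beats_control(conv_a: int, n_a: int, conv_b: int, n_b: int,
                                 draws: int = 20_000, seed: int = 0) -> float:
    """Estimate P(rate_B > rate_A) under independent Beta(1, 1) priors."""
    rng = random.Random(seed)  # fixed seed so the estimate is reproducible
    wins = 0
    for _ in range(draws):
        # Posterior for each version: Beta(1 + conversions, 1 + non-conversions).
        rate_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        rate_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        if rate_b > rate_a:
            wins += 1
    return wins / draws


if __name__ == "__main__":
    # Hypothetical data: 25,000 visitors per version.
    prob = prob_variation_beats_control(500, 25_000, 600, 25_000)
    print(f"P(variation beats control) is about {prob:.3f}")
```

The output reads directly as "the chance that the variation is better", which is often easier to act on than a p-value.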

Getting started with split testing

A split test pays off best when it follows a structured, well-crafted plan. Here’s how to create an effective split testing campaign.

Research

Before building a split testing plan, conduct thorough research to understand the current performance of the page or element you’re planning to test. Use qualitative and quantitative data gathering tools to collect all the relevant data about the elements you want to test.

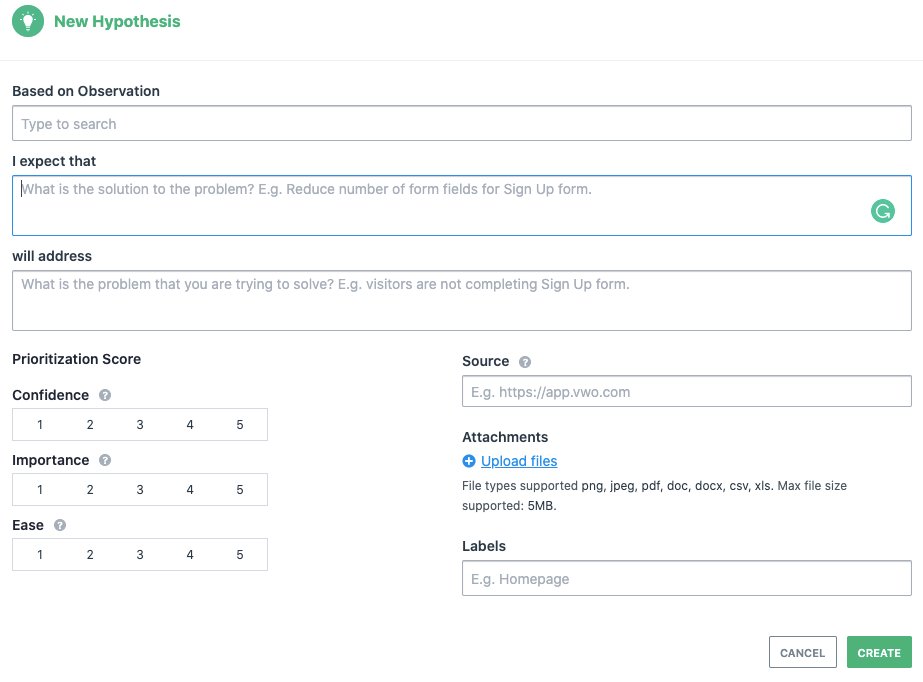

Observe and formulate hypotheses

Study the gathered data closely and draw user insights from it to draft a data-backed hypothesis. Use a structured hypothesis creation formula to write a good, thorough hypothesis. You should also score your hypothesis on parameters such as impact, confidence, and ease. Impact is how likely your test is to affect your key metrics positively. Confidence is how strongly you believe the hypothesis will prove true. Ease refers to how easy or difficult it will be to implement the changes and run the test.
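
The impact, confidence, and ease parameters can be turned into a simple prioritization score. The sketch below assumes each parameter is rated from 1 to 10 and that the score is their average, which is one common convention (some teams multiply the three values instead); the `Hypothesis` class and the example hypotheses are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class Hypothesis:
    name: str
    impact: int      # 1-10: expected positive effect on the key metric
    confidence: int  # 1-10: belief that the hypothesis will prove true
    ease: int        # 1-10: how easy the change is to build and test

    @property
    def ice_score(self) -> float:
        # Assumption: a plain average of the three ratings.
        return (self.impact + self.confidence + self.ease) / 3


def prioritize(hypotheses):
    """Return the hypotheses sorted from highest to lowest score."""
    return sorted(hypotheses, key=lambda h: h.ice_score, reverse=True)


if __name__ == "__main__":
    backlog = [
        Hypothesis("Discount banner on homepage", impact=8, confidence=6, ease=9),
        Hypothesis("Redesign entire checkout", impact=9, confidence=5, ease=2),
        Hypothesis("Shorter signup form", impact=6, confidence=7, ease=8),
    ]
    for h in prioritize(backlog):
        print(f"{h.ice_score:.2f}  {h.name}")
```

Scoring every idea in the backlog this way gives a consistent, if rough, order in which to test them.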

Create variations

After drafting a data-backed hypothesis, create variations. A variation is another version of your original or current page with the changes that you want to test. With VWO’s Visual Editor, it is quick and easy to create variations to test against the original version.

Run the test

Once you’ve finalized the variations that you want to test, set up the test. Kick it off and let it run for the planned duration so that it can reach statistical significance. No matter what kind of test you run, let it complete its due course. If you stop the test partway through, the chances of getting wrong results increase.
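
To get a feel for how long a test needs to run, you can estimate the required sample size before starting. The sketch below uses the standard fixed-horizon approximation for comparing two proportions; the function name, the baseline rate, and the expected uplift are assumptions for illustration, and tools built on other statistical models may plan test duration differently.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, relative_uplift: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate visitors needed per version to detect the given uplift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_uplift)
    # Normal quantiles for a two-sided significance level and the desired power.
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_power) ** 2 * variance / (p2 - p1) ** 2)


if __name__ == "__main__":
    # Hypothetical: 2% baseline conversion rate, hoping to detect a 20% relative uplift.
    n = sample_size_per_variant(baseline_rate=0.02, relative_uplift=0.20)
    print(f"Visitors needed per version: {n}")
```

With these made-up inputs, the estimate is on the order of 21,000 visitors per version. Smaller expected uplifts or lower baseline rates push the number up quickly, which is why low-traffic pages need longer tests.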

Result analysis and deployment

In this step, analyze the results of the test. Check whether your variation has shown an uplift in the key metrics. If your variation wins, implement the changes. If it loses, use the data to learn and to refine your future experiments.
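
Uplift is usually reported as the relative change in conversion rate between the control and the variation. A minimal sketch, with a hypothetical function name and made-up numbers:

```python
def relative_uplift(conv_control: int, n_control: int,
                    conv_variation: int, n_variation: int) -> float:
    """Relative change in conversion rate of the variation versus the control."""
    rate_control = conv_control / n_control
    rate_variation = conv_variation / n_variation
    if rate_control == 0:
        raise ValueError("Control has no conversions; relative uplift is undefined.")
    return (rate_variation - rate_control) / rate_control


if __name__ == "__main__":
    # Hypothetical data: 25,000 visitors per version.
    uplift = relative_uplift(500, 25_000, 600, 25_000)
    print(f"Relative uplift: {uplift:.1%}")
```

An uplift figure on its own does not say whether the difference is reliable, so read it alongside the statistical result of the test.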

How to choose a good split testing tool

When choosing a testing tool, there are several features and functions to consider. Look for an all-inclusive tool that is intuitive and makes it easy both to build new test versions and to run tests. Make sure the tool you select enables you to:

- Gather and analyze qualitative and quantitative data and get in-depth information about how various business metrics are performing

- Run multiple tests in parallel on isolated audiences so that no test influences the results of another

- Set up tests with minimum developer help

- Easily create hypotheses and test versions using its in-built features

- Use multiple widgets for testing and adding popups, banners, or other prompts to generate leads

- Quickly access accurate results to understand whether or not your hypothesis holds

- Quickly deploy tests and map results throughout the testing process

VWO fits this bill perfectly. You can explore its capabilities by taking a 30-day free trial. You can also go through this list of recommended split testing tools.

Conclusion

No website owner or experience optimizer should ever rest on their laurels. They must always look for improvements and optimization techniques to ensure their websites and related campaigns perform better and consistently increase business revenue. Split testing is a vital means of making changes based on data rather than assumptions. Leverage it now and in the future.
