What is Multi-Armed Bandit optimization in CRO?

What happens in a traditional A/B test? There are usually two variants of a webpage, and visitors who become part of the test are split evenly between the two variants. One of these variants is declared a winner, and upon being deployed, all visitors see the winning variant of the page. However, before the deployment happens, a significant amount of traffic is pushed to the suboptimal variation, which is the underlying opportunity cost associated with traditional A/B tests. A user benefits from the best variation only after the winning variation gets deployed.

However, by using bandit-based algorithms, you can maximize your website’s output by continuously exposing the better performing variation to more and more visitors long before the test reaches statistical significance, reducing the opportunity cost associated with A/B testing. This is done by continuously comparing the performance of all variations and acting on the output reported by the comparison.

To understand this, imagine a time-constrained scenario: You want to maximize conversions on your website’s homepage banner, which talks about an upcoming sale, and you have several variations of this banner. Initially, a multi-armed bandit (MAB) algorithm shows each variation to an equal percentage of your website traffic. Eventually, it starts directing the traffic to the variation that is gaining more conversions. This way, the variation performing better at a given time starts getting more traffic, and in turn, your conversions get amplified.

Overview of VWO’s MAB algorithm built on Bayesian philosophy

For A/B testing, VWO uses the Bayesian-based SmartStats engine to determine which variation of your website is better and by how much. Our MAB algorithm dynamically changes the traffic split of all variations as per their performance and is also based on the Bayesian philosophy.

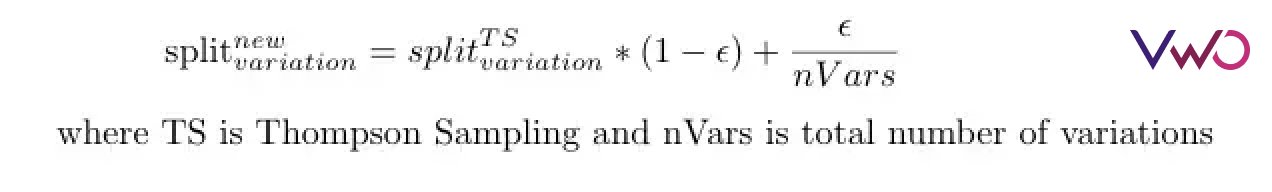

We use a combination of epsilon-greedy and Thompson sampling based on a multi-armed bandit approach for balancing exploration and exploitation while a test runs in bandit mode. Based on this strategy, we dynamically change the traffic split of all variations as per their performance and a small percentage of traffic committed for exploration. Here is a brief overview of the main components involved in our MAB algorithm –

- Weights initialization – As MAB starts, initialize all performance contributors (known as weights) to a variation with a normal distribution with mean 0 and std 1.

- Weights updation – Based on visitors/conversions obtained in each variation in a test, update the corresponding parameters of the weight distributions in a fixed time interval.

- Compute traffic split – Get traffic split using Thompson Sampling-based hill-climbing algorithm.

- Add exploration factor – Update the traffic split in the test after dedicating a fixed percentage of traffic for exploration.

With the suggested approach of incorporating weight distributions and their initialization, the initial traffic is distributed equally among all variations when the test begins. As the test gets more visitors, the traffic split is changed dynamically based on the performance of each variation, even as the test runs. This way, MAB starts displaying the better-performing variation to more and more visitors.

What do weights represent in our algorithm?

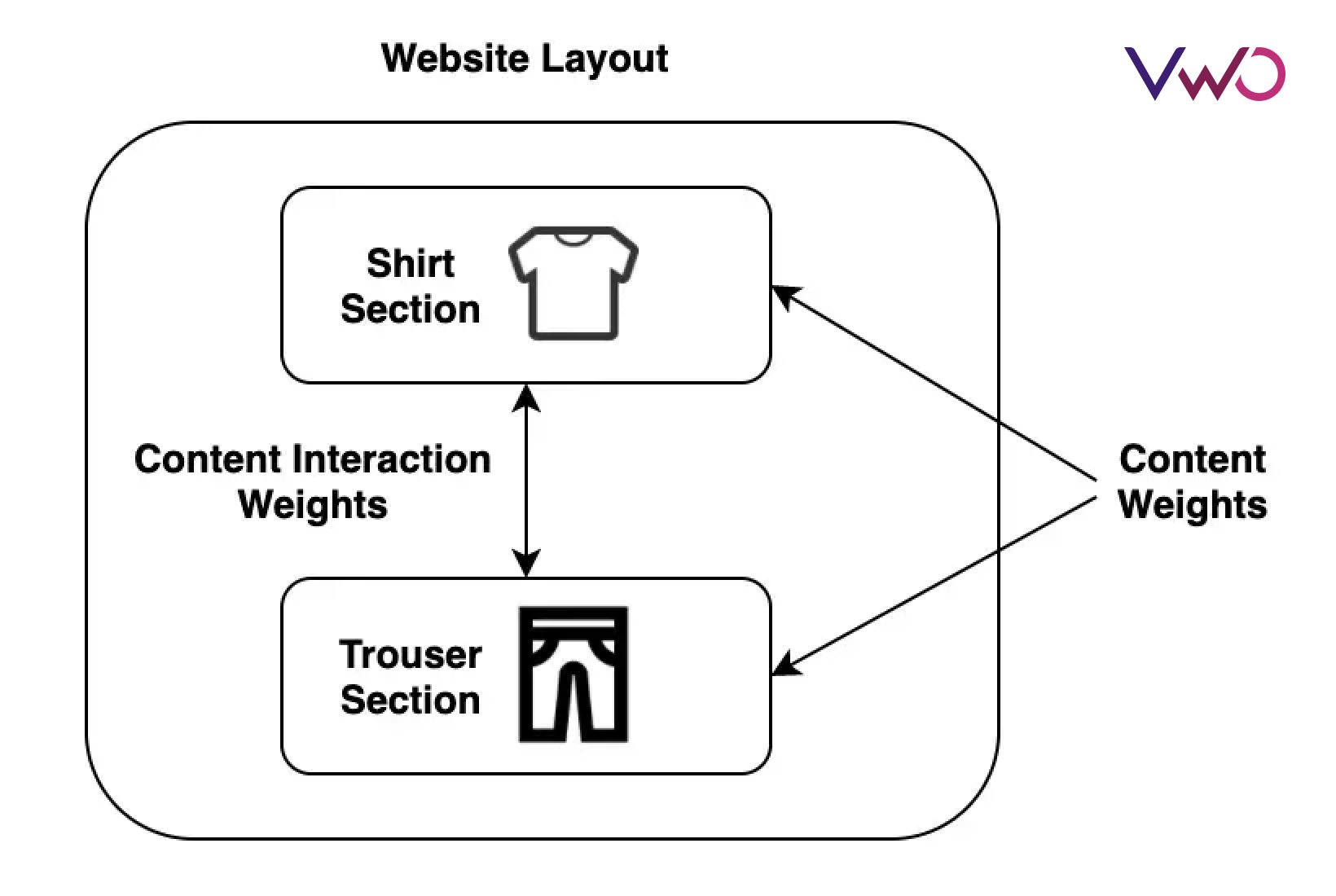

In this section, we’ll explain what factors are taken into account to quantify the performance of variations used in a test. Multiple factors determine the traffic allocation to each variation. These factors are:

- Content weight: Using content weights we model how an individual variant affects the conversion rate of the overall page layout.

- (In the case of a multivariate test) Content interaction weight: Using content interaction weights we model the combined effect of two variants in separate sections that affect the conversion rate of the overall page layout.

All weights associated with a variation’s page layout contribute towards its conversion rate or average revenue. By modelling weights as probability distributions, we capture uncertainty in our Bandit algorithm.

How are the weights learned?

The update algorithm we use works best if we know the sequence in which visitors land on your website. We use the Assumed Density Filtering algorithm to model a variation’s conversion rate/revenue and the message passing algorithm of Bayesian factor graphs to update its corresponding weight distributions. This approach has helped us model conversion rate/revenue in MAB analytically, helping us build a scalable solution for our customers.

The next section will explain how Thompson Sampling determines traffic split from the updated weight distributions.

The intuition behind Thompson Sampling

Thompson Sampling is essentially an explore/exploit optimization strategy where one tries to minimize conversion loss while exploring new variations. The core idea behind the approach is that the experimental design exploits the Bayesian methods to constantly update belief distributions based on new knowledge, such that the overall regret can be minimized.

Suppose you have to choose between two variations, A and B, for your home page banner. You have a prior belief that variation A resonates well with your audience. Let’s assume you have quantified your belief that for every 100 visitors viewing variation A, 20 will click on it, and 10 will click on variation B. According to this, when a visitor visits your website, you toss a biased coin that has a 66% chance of a head vs. 34% of a tail. If a head comes, then the visitor is shown variation A otherwise, variation B.

A logical question could then be – why not show every visitor variation A since it is more beneficial? The answer is that the effectiveness depends on your belief which needs to be validated by hardcore evidence or data. The critical aspect of Bayesian thinking is that belief could be made a part of the research, and this belief gets updated with evidence.

Determining traffic split from weights using Thompson Sampling

Once we have all the updated weights (beliefs learned from data), we use them to determine the traffic split for all variations in the test. This happens by running a visitor simulator where we simulate, say 10K visitors, and use a Thompson Sampling-based hill-climbing algorithm to determine which variation will be the best one to show to each of those visitors. By computing the winning proportion of all variations we obtain the traffic split based on the weights.

Adding an exploration factor to the traffic split of MAB

So far, the model assumes all variations to have a fixed conversion rate and average revenue, so after obtaining many data points, learning would come to a halt, and the model will run in full exploitation mode. Therefore, to avoid convergence of a model to a single variation we use epsilon-greedy along with Thompson Sampling to determine traffic split. We adjust the traffic split by considering a fixed epsilon factor for exploration.

Summary

In this article, we provided the overall view of the different components involved in the multi-armed bandit algorithm we designed at VWO. You can request a demo with our product experts if you’d like to explore this in detail.

Bandit algorithms are suitable for optimizers who care more about maximizing a metric in a short time and less about statistical significance. These algorithms are practical for rapid experimentation because they concentrate on actions that eventually lead to the greatest potential reward. This class of algorithms maintains a balance between exploration and exploitation to maximize reward while trying suboptimal decisions before finding the optimal one.